Supervised Learning Tutorial

Note

This tutorial will cover how to use Rasa Core directly from Python. We will dive a bit deeper into the different concepts and overall structure of the library. You should already be familiar with the terms domain and stories, and have some knowledge of NLU.

Goal

In this example we will create a restaurant search bot by training a neural net on example conversations. A user can contact the bot with something close to "I want a mexican restaurant!" and the bot will ask for more details until it is ready to suggest a restaurant.

Let’s start by creating a new project directory:

mkdir restaurantbot && cd restaurantbot

After we are done creating the different files, the final structure will look like this:

restaurantbot/
├── data/
│   ├── babi_stories.md       # dialogue training data
│   └── franken_data.json     # nlu training data
├── restaurant_domain.yml     # dialogue configuration
└── nlu_model_config.json     # nlu configuration

All example code snippets assume you are running the code from within that project directory.

1. Creating the Domain

Our restaurant domain contains a couple of slots as well as a number of intents, entities, utterance templates and actions. Let’s save the following domain definition in restaurant_domain.yml:

slots:
  cuisine:
    type: text
  people:
    type: text
  location:
    type: text
  price:
    type: text
  info:
    type: text
  matches:
    type: list

intents:
  - greet
  - affirm
  - deny
  - inform
  - thankyou
  - request_info

entities:
  - location
  - info
  - people
  - price
  - cuisine

templates:
  utter_greet:
    - "hey there!"
  utter_goodbye:
    - "goodbye :("
    - "Bye-bye"
  utter_default:
    - "default message"
  utter_ack_dosearch:
    - "ok let me see what I can find"
  utter_ack_findalternatives:
    - "ok let me see what else there is"
  utter_ack_makereservation:
    - "ok making a reservation"
  utter_ask_cuisine:
    - "what kind of cuisine would you like?"
  utter_ask_helpmore:
    - "is there anything more that I can help with?"
  utter_ask_howcanhelp:
    - "how can I help you?"
  utter_ask_location:
    - "in which city?"
  utter_ask_moreupdates:
    - "anything else you'd like to modify?"
  utter_ask_numpeople:
    - "for how many people?"
  utter_ask_price:
    - text: "in which price range?"
      buttons:
        - title: "cheap"
          payload: "cheap"
        - title: "expensive"
          payload: "expensive"
  utter_on_it:
    - "I'm on it"

actions:
  - utter_greet
  - utter_goodbye
  - utter_default
  - utter_ack_dosearch
  - utter_ack_findalternatives
  - utter_ack_makereservation
  - utter_ask_cuisine
  - utter_ask_helpmore
  - utter_ask_howcanhelp
  - utter_ask_location
  - utter_ask_moreupdates
  - utter_ask_numpeople
  - utter_ask_price
  - utter_on_it
  - bot.ActionSearchRestaurants
  - bot.ActionSuggest

You can instantiate that Domain like this:

from rasa_core.domain import TemplateDomain

domain = TemplateDomain.load("restaurant_domain.yml")
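In the domain file, every entry under actions is either backed by a template of the same name or written as a module path to a class. The following is a minimal sketch of a sanity check for that convention. It works on a hand-copied subset of the domain held in a plain dict, and the helper `classify_actions` is a hypothetical name for illustration, not part of Rasa Core.

```python
# Hand-copied subset of restaurant_domain.yml as a plain dict (illustrative only).
domain_subset = {
    "templates": {
        "utter_greet": ["hey there!"],
        "utter_goodbye": ["goodbye :(", "Bye-bye"],
        "utter_on_it": ["I'm on it"],
    },
    "actions": [
        "utter_greet",
        "utter_goodbye",
        "utter_on_it",
        "bot.ActionSearchRestaurants",
        "bot.ActionSuggest",
    ],
}


def classify_actions(domain_dict):
    """Split actions into template-backed, module-path, and unresolved names.

    Hypothetical helper: this is not how Rasa Core itself validates a domain.
    """
    templated, custom, unresolved = [], [], []
    for action in domain_dict["actions"]:
        if action in domain_dict["templates"]:
            templated.append(action)
        elif "." in action:
            # Treat a dotted name as a module path to a custom action class.
            custom.append(action)
        else:
            unresolved.append(action)
    return templated, custom, unresolved


templated, custom, unresolved = classify_actions(domain_subset)
print("template-backed:", templated)
print("custom classes: ", custom)
print("unresolved:     ", unresolved)
```

An empty unresolved list means every action name in the subset can be traced to either a template or a class.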

Our Domain has clearly defined slots (in our case, the criteria for the target restaurant) and intents (what the user can send). It also requires templates, which provide the text the bot responds with for a given action. Each of these actions must either be named after an utterance (dropping the utter_ prefix) or must be a module path to an action. Here is the code for one of the two custom actions:

from rasa_core.actions import Action

class ActionSearchRestaurants(Action):
    def name(self):
        return 'search_restaurants'

    def run(self, dispatcher, tracker, domain):
        dispatcher.utter_message("here's what I found")
        return []

The name method is used to match actions up with utterances, and the run method is executed whenever the action is called. This may involve API calls or internal bot dynamics.
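To see the name/run contract in isolation, here is a rough sketch that exercises an action without Rasa Core installed. `RecordingDispatcher` is a hypothetical stand-in that only records messages, and the action is a plain class that mirrors the shape of the one above rather than inheriting from `Action`.

```python
class RecordingDispatcher:
    """Hypothetical stand-in for the dispatcher: it only records messages."""

    def __init__(self):
        self.messages = []

    def utter_message(self, text):
        self.messages.append(text)


class SearchRestaurantsSketch:
    """Plain class with the same name()/run() shape as the action above."""

    def name(self):
        return "search_restaurants"

    def run(self, dispatcher, tracker, domain):
        # A real action might call an API here; this one only replies.
        dispatcher.utter_message("here's what I found")
        return []  # no follow-up events


dispatcher = RecordingDispatcher()
action = SearchRestaurantsSketch()
# tracker and domain are unused by this action, so None is passed for both.
events = action.run(dispatcher, tracker=None, domain=None)
print(action.name(), dispatcher.messages, events)
```

Testing an action against a stub like this keeps the check independent of a trained model.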

But a domain alone doesn’t make a bot. We need some training data to tell the bot which actions it should execute at what point in time. So let’s create some stories!

2. Creating Training Data

The training conversations come from the bAbI dialog task. However, the messages in these dialogues are machine generated, so we will augment this dataset with real user messages from the DSTC dataset. Lucky for us, this dataset is also in the restaurant domain.

Note

The bAbI dataset is machine-generated, and there are a LOT of dialogues in there. There are 1000 stories in the training set, but you don’t need that many to build a useful bot. How much data you need depends on the number of actions you define, and the number of edge cases you want to support. But a few dozen stories is a good place to start.

We have converted the bAbI dialogue training set into the Rasa stories format. You can download the stories training data from GitHub. Download that file and store it in data/babi_stories.md. Here’s an example conversation snippet:

## story_07715946
* _greet[]
 - action_ask_howcanhelp
* _inform[location=rome,price=cheap]
 - action_on_it
 - action_ask_cuisine
* _inform[cuisine=spanish]
 - action_ask_numpeople
* _inform[people=six]
 - action_ack_dosearch
...
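The snippet above follows a simple line-based layout: a `##` line names the story, a `*` line is a user message with an intent and optional entities, and a `-` line is a bot action. As an illustration only, the sketch below reads that layout into Python structures. It is not Rasa Core's own story reader, `parse_story` is a hypothetical helper, and the sample leaves out the trailing ellipsis.

```python
import re

STORY = """\
## story_07715946
* _greet[]
 - action_ask_howcanhelp
* _inform[location=rome,price=cheap]
 - action_on_it
 - action_ask_cuisine
* _inform[cuisine=spanish]
 - action_ask_numpeople
* _inform[people=six]
 - action_ack_dosearch
"""

# "* _intent[key=value,key=value]"; the leading underscore is optional here.
USER_LINE = re.compile(r"^\*\s*_?(\w+)\[(.*)\]$")


def parse_story(text):
    """Return (story_name, turns); each turn holds intent, entities, actions."""
    name, turns = None, []
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("##"):
            name = line[2:].strip()
        elif line.startswith("*"):
            match = USER_LINE.match(line)
            if match is None:
                raise ValueError("unrecognised user line: %r" % line)
            intent, args = match.groups()
            entities = dict(p.split("=", 1) for p in args.split(",") if p)
            turns.append({"intent": intent, "entities": entities, "actions": []})
        elif line.startswith("-"):
            if not turns:
                raise ValueError("action before any user message: %r" % line)
            turns[-1]["actions"].append(line[1:].strip())
    return name, turns


story_name, turns = parse_story(STORY)
for turn in turns:
    print(turn["intent"], turn["entities"], "->", turn["actions"])
```

Each turn pairs one user message with the actions the bot took before the next user message.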

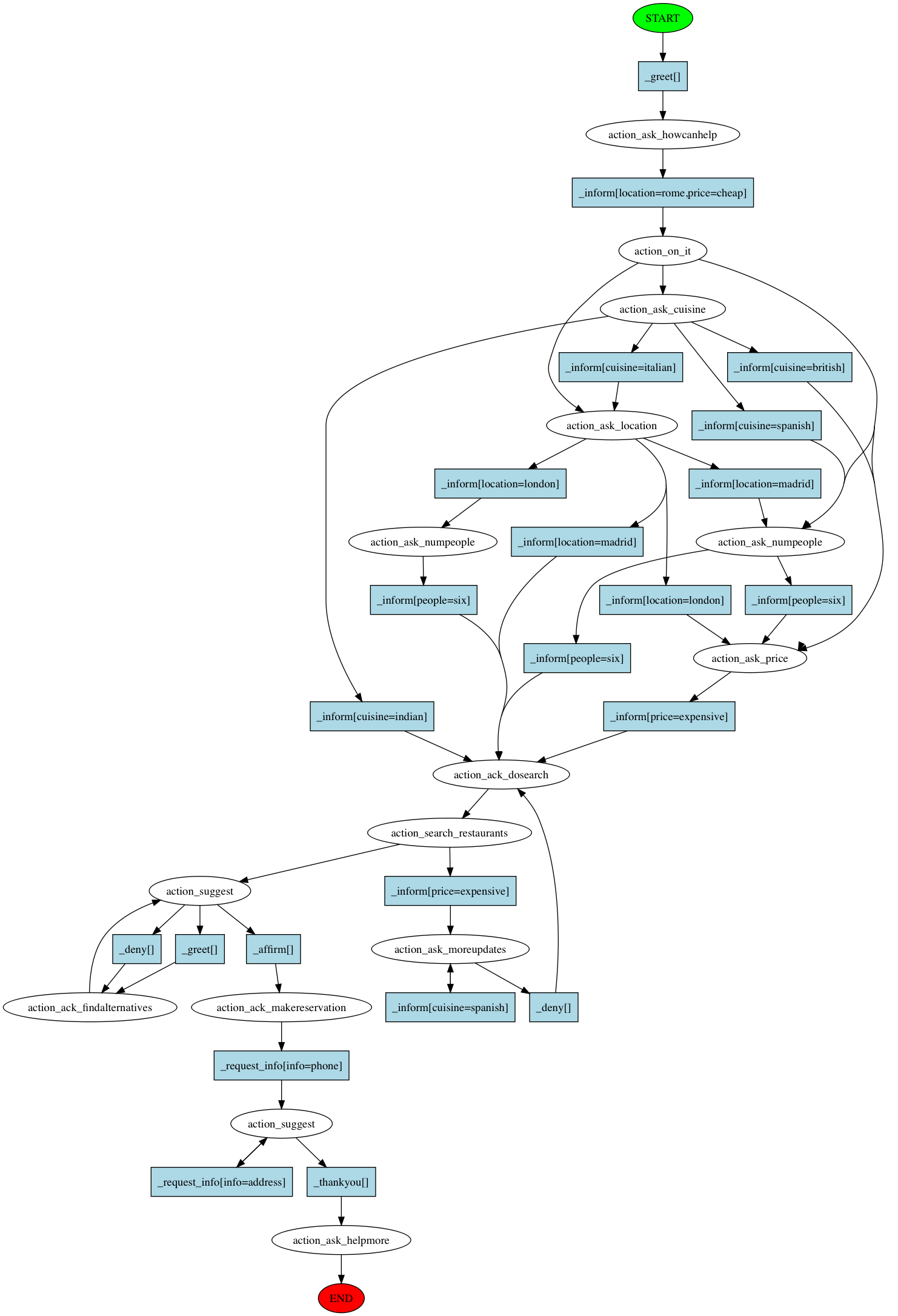

See Stories - The Training Data to get more information about the Rasa Core data format. We can also visualize that training data to generate a graph which is similar to a flow chart:

The graph shows all of the actions executed in the training data, and the user messages (if any) that occurred between them. As you can see, flow charts get complicated quite quickly. Nevertheless, they can be a helpful tool in debugging a bot. More information can be found in Visualization of Stories.
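The idea behind such a graph can be sketched in a few lines: walk each story, and for every pair of consecutive actions record which user message (if any) came between them. This is a simplified illustration, not the visualization code that ships with Rasa Core. The first story is a prefix of the snippet shown earlier, the second is made up to show branching, and `build_edges` is a hypothetical helper.

```python
from collections import defaultdict

# Each story is a list of (kind, name) events.
stories = [
    [("user", "greet"), ("action", "action_ask_howcanhelp"),
     ("user", "inform"), ("action", "action_on_it"),
     ("action", "action_ask_cuisine")],
    # Made-up second story so the graph has a branch.
    [("user", "greet"), ("action", "action_ask_howcanhelp"),
     ("user", "inform"), ("action", "action_ask_cuisine")],
]


def build_edges(stories):
    """Map (previous_action, next_action) to the user messages seen in between.

    None in the set means the two actions ran back to back with no message.
    """
    edges = defaultdict(set)
    for story in stories:
        previous, pending = "START", None
        for kind, name in story:
            if kind == "user":
                pending = name
            else:
                edges[(previous, name)].add(pending)
                previous, pending = name, None
    return dict(edges)


edges = build_edges(stories)
for (source, target), messages in sorted(edges.items()):
    print(source, "->", target, "via", messages)
```

Even with two short stories the edge list branches, which is why the full graph grows complicated so quickly.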

3. Training your bot

We can go directly from data to bot with only a few steps:

- train a Rasa NLU model to extract intents and entities. Read more about that in the NLU docs.

- train a dialogue policy which will learn to choose the correct actions

- set up an agent which has both the NLU model and the dialogue policy working together to go directly from user input to action

We will go through these steps one by one.

NLU model

To train our Rasa NLU model, we need to create a configuration first in nlu_model_config.json:

{
  "pipeline": [
    "nlp_spacy",
    "tokenizer_spacy",
    "intent_featurizer_spacy",
    "intent_classifier_sklearn",
    "ner_crf",
    "ner_synonyms"
  ],
  "path" : "./models",
  "project": "nlu",
  "data" : "./data/franken_data.json"
}

We can train the NLU model using

python -m rasa_nlu.train -c nlu_model_config.json --fixed_model_name current

or using Python code:

def train_nlu():
    from rasa_nlu.converters import load_data
    from rasa_nlu.config import RasaNLUConfig
    from rasa_nlu.model import Trainer

    training_data = load_data('data/franken_data.json')
    trainer = Trainer(RasaNLUConfig("nlu_model_config.json"))
    trainer.train(training_data)
    model_directory = trainer.persist('models/nlu/', fixed_model_name="current")

    return model_directory

You can learn all about Rasa NLU starting from the GitHub repository. What you need to know, though, is that interpreter.parse(user_message) returns a dictionary with the intent and entities from a user message.
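As a rough illustration of working with such a dictionary, the sketch below assumes the result has an "intent" entry with a name and a list of "entities" with entity/value pairs. The confidence and character offsets are made up for this example, the choice of `inform` as the predicted intent is an assumption, and `intent_and_slots` is a hypothetical helper.

```python
# Assumed shape of a parse result; all values here are illustrative.
parsed = {
    "text": "I want a mexican restaurant!",
    "intent": {"name": "inform", "confidence": 0.93},
    "entities": [
        {"entity": "cuisine", "value": "mexican", "start": 9, "end": 16},
    ],
}

# Slot names taken from restaurant_domain.yml.
KNOWN_SLOTS = {"cuisine", "people", "location", "price", "info", "matches"}


def intent_and_slots(parsed, known_slots):
    """Pull out the intent name and any entities that match a known slot."""
    intent = parsed["intent"]["name"]
    slots = {
        entity["entity"]: entity["value"]
        for entity in parsed["entities"]
        if entity["entity"] in known_slots
    }
    return intent, slots


intent, slots = intent_and_slots(parsed, KNOWN_SLOTS)
print(intent, slots)
```

The intent and the extracted entities are what the dialogue side needs from NLU in order to pick the next action.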

Training NLU takes approximately 18 seconds on a 2014 MacBook Pro.

Dialogue Policy

Now our bot needs to learn what to do in response to these messages. We do this by training one or multiple Rasa Core policies.

For this bot, we came up with our own policy. Here are the gory details:

import logging

from rasa_core.policies.keras_policy import KerasPolicy

logger = logging.getLogger(__name__)


class RestaurantPolicy(KerasPolicy):
    def model_architecture(self, num_features, num_actions, max_history_len):
        """Build a Keras model and return a compiled model."""
        from keras.layers import LSTM, Activation, Masking, Dense
        from keras.models import Sequential

        n_hidden = 32  # size of hidden layer in LSTM
        # Build Model
        batch_shape = (None, max_history_len, num_features)

        model = Sequential()
        # time steps filled with -1 are treated as padding and skipped
        model.add(Masking(-1, batch_input_shape=batch_shape))
        model.add(LSTM(n_hidden, batch_input_shape=batch_shape))
        # one output unit per action, turned into probabilities by softmax
        model.add(Dense(input_dim=n_hidden, output_dim=num_actions))
        model.add(Activation('softmax'))

        model.compile(loss='categorical_crossentropy',
                      optimizer='adam',
                      metrics=['accuracy'])

        logger.debug(model.summary())
        return model

Note

Remember, you do not need to create your own policy. The default policy setup using a memoization policy and a Keras policy works quite well. Nevertheless, you can always fine tune them for your use case. Read Plumbing - How it all fits together for more info.

This policy extends the Keras policy by modifying the ML architecture of the network. The parameters max_history_len and n_hidden may be altered depending on the task complexity and the amount of data available. max_history_len is important because it is the number of story steps the network has access to when making a classification.
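To make the role of max_history_len concrete, here is a small sketch of how a conversation could be cut into fixed-length windows, with missing earlier steps padded by -1 (the value the Masking layer above is told to skip). This is an assumption about the general technique, not Rasa Core's actual featurizer, and `history_windows` is a hypothetical helper.

```python
def history_windows(steps, max_history, pad_value=-1):
    """For each step, return the last `max_history` feature vectors.

    Windows near the start of the conversation are left-padded with
    vectors full of `pad_value` so every window has the same length.
    """
    num_features = len(steps[0])
    windows = []
    for i in range(len(steps)):
        recent = steps[max(0, i + 1 - max_history): i + 1]
        missing = max_history - len(recent)
        padding = [[pad_value] * num_features for _ in range(missing)]
        windows.append(padding + recent)
    return windows


# Four conversation steps, each described by two toy features.
steps = [[1, 0], [0, 1], [1, 1], [0, 0]]
windows = history_windows(steps, max_history=3)
for window in windows:
    print(window)
```

With max_history=3 the network never sees the first step when classifying the fourth, which is why this parameter should grow with the length of the dependencies in your stories.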

Now let’s train it:

from rasa_core.agent import Agent
from rasa_core.policies.memoization import MemoizationPolicy


def train_dialogue(domain_file="restaurant_domain.yml",
                   model_path="models/dialogue",
                   training_data_file="data/babi_stories.md"):
    agent = Agent(domain_file,
                  policies=[MemoizationPolicy(), RestaurantPolicy()])

    agent.train(
        training_data_file,
        max_history=3,
        epochs=100,
        batch_size=50,
        augmentation_factor=50,
        validation_split=0.2
    )

    agent.persist(model_path)
    return agent

This code creates the policies to be trained and uses the story training data to train and persist a model. The goal of the trained policy is to predict the next action, given the current state of the bot.
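The memoization idea can be illustrated with a toy lookup table: remember which action followed each recent history in the training stories, and return nothing for histories never seen. This is a deliberately simplified sketch, not the implementation of Rasa Core's MemoizationPolicy. The class `ToyMemoPolicy` and the flat list-of-strings story encoding are hypothetical.

```python
class ToyMemoPolicy:
    """Toy lookup-table policy keyed on the last `max_history` events."""

    def __init__(self, max_history):
        self.max_history = max_history
        self.lookup = {}

    def _key(self, history):
        return tuple(history[-self.max_history:])

    def train(self, stories):
        for story in stories:
            for i, event in enumerate(story):
                # Only actions are predicted; user intents are inputs.
                if event.startswith("action_"):
                    self.lookup[self._key(story[:i])] = event

    def predict(self, history):
        """Return the memorised next action, or None for an unseen history."""
        return self.lookup.get(self._key(history))


story = ["greet", "action_ask_howcanhelp", "inform",
         "action_on_it", "action_ask_cuisine"]
policy = ToyMemoPolicy(max_history=3)
policy.train([story])

seen = policy.predict(["greet"])
unseen = policy.predict(["deny"])
print(seen, unseen)
```

A history that never occurred in training yields no prediction, which is the gap a learned model such as the Keras policy is meant to fill by generalising.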

To train it from the command line, run

python bot.py train-dialogue

to get our trained policy.

Training the dialogue model takes roughly 12 minutes on a 2014 MacBook Pro.
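The tutorial does not show how bot.py turns the train-dialogue argument into a function call. One plausible way to wire it up is with argparse, sketched below. Only train-dialogue appears in the text above. The other task names and the placeholder handlers are assumptions made for illustration.

```python
import argparse


def build_parser():
    """Build a parser with one positional `task` argument."""
    parser = argparse.ArgumentParser(description="restaurant bot tasks")
    parser.add_argument(
        "task",
        # "train-nlu" and "run" are assumed names, not taken from the tutorial.
        choices=["train-nlu", "train-dialogue", "run"],
        help="which step to execute",
    )
    return parser


# Placeholder handlers; in a real bot.py these would call train_nlu(),
# train_dialogue(), and the code that starts the agent.
HANDLERS = {
    "train-nlu": lambda: "would call train_nlu()",
    "train-dialogue": lambda: "would call train_dialogue()",
    "run": lambda: "would load and run the agent",
}


def dispatch(task):
    return HANDLERS[task]()


# Simulates: python bot.py train-dialogue
args = build_parser().parse_args(["train-dialogue"])
result = dispatch(args.task)
print(result)
```

Keeping the task names in one dictionary means adding a new step only requires a new entry and a matching choice.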

4. Using the bot

Now we’re going to glue some pieces together to create an actual bot.

We instantiate the policy and an Agent instance, which owns an Interpreter, a Policy, and a Domain. We put the NLU model into an Interpreter and then put that into an Agent.

We will pass messages directly to the bot, but this is just for demonstration purposes. To see how to build a command line bot or a Facebook bot, check out Connecting to messaging & voice platforms.

from rasa_core.interpreter import RasaNLUInterpreter

from rasa_core.agent import Agent

agent = Agent.load("models/dialogue", interpreter=RasaNLUInterpreter("models/nlu/current"))

We can then try sending it a message:

>>> agent.handle_message("_greet")

[u'hey there!']

And there we have it! A minimal bot containing all the important pieces of Rasa Core.

If you want to handle input from the command line (or a different input channel), you need to handle that channel instead of handling messages directly, e.g.:

from rasa_core.channels.console import ConsoleInputChannel

agent.handle_channel(ConsoleInputChannel())

In this case messages will be retrieved from the command line because we specified the ConsoleInputChannel. Responses are printed to the command line as well. You can find a complete example of how to load an agent and chat with it on the command line in examples/restaurantbot/run.py.